Introducing Matic

The world’s most advanced floor cleaner. Your home’s best helper.

Pre-Order Your Matic

Watch Matic take on NYC

Meet the first truly smart home robot.

Matic senses and solves dry and wet messes — no babysitting required.

01

Mopping and vacuuming — Matic does it all.

Matic’s state-of-the-art computer vision senses what’s around it, automatically switching between cleaning modes to get the job done.

Cutting-edge mess and surface detection

Works on all floor surfaces

Auto-toggles between mopping and vacuuming

02

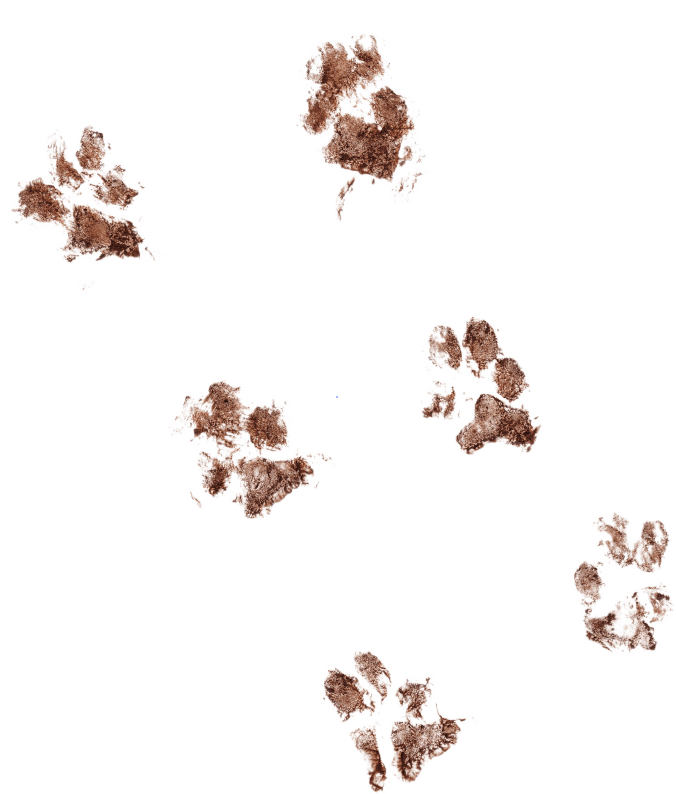

Learns nonstop. Cleans nonstop. No supervision necessary.

Matic’s on patrol 24/7, quietly working around the clock for a continuous clean. Watch Matic because you want to, not because you have to.

Patrols your home with 3D floor mapping

Knows what to clean (and what to avoid)

Quiet enough for work time and nap time

03

“Hey Matic, clean this!”

Matic responds to your voice and gestures. Create a routine in the app or just point and tell Matic where to go. Your house, your rules.

Listens to your voice and recognizes gestures

Easy in-app scheduling

Less time cleaning, more time

Matic saves the average family an hour a day on floor cleaning.

The future of robotics comes home.

Matic was founded by two busy fathers who love technology and hate cleaning. With decades of engineering experience, they’re on a mission to solve everyday problems with remarkable robotics.

Interested in Matic? Work with us

Finally, a floor-cleaning innovation worth celebrating.

© 2024 Matic. All Rights Reserved.